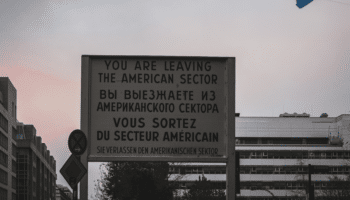

Yesterday we observed what appear to be unrelated incidents in us-west-2 (Oregon) and eu-central-1 (Frankfurt) that gave a number of AWS customers a run for their money as provisioning times for several core services rose to unacceptable levels. It occurred to me that an element of “common wisdom” may not be nearly as common as some of us believe. A cursory check of the internet didn’t turn up much along these lines, so I figured I’d write down my thoughts and see what the community thinks.

Things you should know about Disaster Recovery in AWS

- AWS regions have separate control planes, which generally serve to isolate faults to particular regions. That’s why we don’t see global outages of DynamoDB, but rather region-specific outages of particular services. Even so, if us-west-2 has an issue and your plan is to fail over to us-east-1, you should be aware that more or less everyone has similar plans. You’ll be in very good, very plentiful company. Some services also still appear to have control planes homed in us-east-1. For instance, during last year’s S3 outage in us-east-1, other regions worked fine, but you couldn’t provision new buckets until the issue had passed.

- AWS regions can take a sudden inrush of new traffic very well; they learned these lessons back in the 2012 era. Provisioning is another matter: you can expect it to be delayed significantly. If your recovery plan includes spinning up EC2 instances, that part of your build can take the better part of an hour in some cases.

- A number of naive cost-cutting tools will suggest turning off idle instances. If you’re going to need to sustain a sudden influx of traffic in a new region, though, you’re going to want those instances spun up and waiting. Any DR strategy that depends on rapidly provisioning new instances (including as part of autoscaling groups) is likely to cause you grief in the event of an actual outage.

None of these are immediately obvious, and they aren’t at all apparent during your standard DR tests. These issues only crop up amid outages that have everyone exercising their DR plans simultaneously.
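To make the gap between a quiet DR test and a real regional event concrete, here is a rough back-of-the-envelope sketch in Python. Everything in it is an assumption for illustration: the function names, the 5-minute "quiet day" launch time, the 50-minute degraded launch time (standing in for "the better part of an hour"), and the simplification that all missing instances launch in parallel. It is not a model of how AWS actually schedules capacity.

```python
def recovery_minutes(warm_instances: int, needed_instances: int,
                     provision_minutes: float) -> float:
    """Estimate minutes until the DR region can carry full load.

    Assumption: every missing instance launches in parallel, so any
    shortfall costs exactly one provisioning wait. Warm capacity that
    already covers the load costs nothing.
    """
    if warm_instances >= needed_instances:
        return 0.0
    return provision_minutes


def meets_rto(warm_instances: int, needed_instances: int,
              provision_minutes: float, rto_minutes: float) -> bool:
    """True if the estimated recovery time fits within the RTO."""
    estimate = recovery_minutes(warm_instances, needed_instances,
                                provision_minutes)
    return estimate <= rto_minutes


# Hypothetical numbers, chosen only to illustrate the point.
QUIET_DAY_PROVISION_MIN = 5.0    # what a scheduled DR test might see
REAL_EVENT_PROVISION_MIN = 50.0  # everyone failing over at once
RTO_MIN = 15.0
NEEDED = 20
```

With these made-up numbers, a cold plan (`warm_instances=0`) passes the check at the quiet-day launch time and fails it at the degraded one, while a plan with all 20 instances already running passes either way. That is the whole argument in miniature: the test you run on a calm Tuesday measures the wrong provisioning time.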

Disasters are hard; plan accordingly.