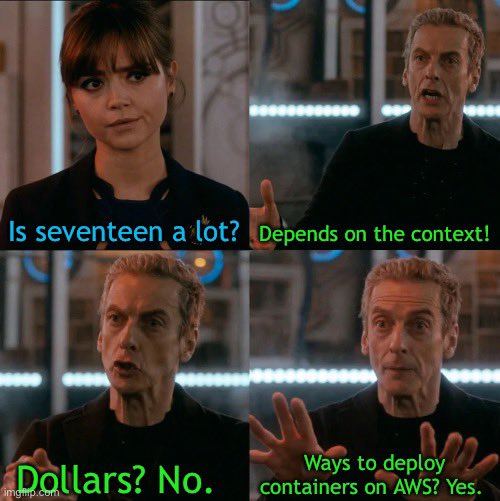

As I mentioned on Twitter last week, there are 17 ways to run containers on AWS. While I pulled the number “17” out of the air, I have it on good authority that this caused something of a “meme explosion” inside of AWS. To that end, I can do no less than enumerate the 17 container options, along with (and this is where I deviate from AWS itself) guidance and commentary as to which you should choose for a given task.

In no particular order, let’s get to:

The 17 Ways to Run Containers on AWS

1. Proton

Proton was announced at re:Invent 2020. It ties together a bunch of tools that you might use to build containerized applications, with an opinionated position on how to build them. Think of this as a way to set “best practices” and push them to the rest of your organization. I’d advise keeping an eye on this; it’s still early days.

2. App Runner

App Runner was announced last week, and is what sparked this whole thing. I’ve tested it myself, and it seems to work best for single-container workloads, or code repositories in JavaScript or Python that you want to shove into a container, then run automatically. I’d wait for other people to define the space before exploring it yourself, but keep an eye on it in the meantime.

3. Lightsail Containers

“Isn’t App Runner like Lightsail Containers?” someone asked. Yes! App Runner is remarkably similar, and a bit more expensive than Lightsail’s container option to boot. Lightsail Containers are probably my go-to answer for applications that fit in a single container.

4. Lightsail and 5. EC2

You can always just use virtual machine-like instances and run containers yourself, be it by hand with Docker, or using some orchestration mechanism of your own. This is how some expressions of EKS and ECS work. It’s really not advised as I see the world, but if you’re trying to integrate with something pre-existing, it’s far from your worst option.

6. EKS

When EKS, AWS’s managed Kubernetes service, launched, it wasn’t great. That story has changed significantly; it’s faster to spin up than it was at launch, the permissions are marginally better than they used to be (at least, it no longer requires full admin permissions to spin up a cluster), and it can leverage underlying EC2 nodes or Fargate, both of which support Spot for cost purposes. It’s not horrible; if you force me to run Kubernetes, this is likely how I’d go about doing it barring outside constraints.

7. ECS

Amazon’s Elastic Container Service is a Kubernetes alternative, and is what I’d go for if I didn’t need to run Kubernetes itself. It takes a simplified view of how to orchestrate and run containers, and is pretty straightforward with respect to its adoption story. I purchased KubernetesTheEasyWay.com and pointed it to the ECS homepage for this reason–and also because nobody made a bid for me to point it to their offering instead. Yet.

8. ROSA

Red Hat OpenShift Service on AWS is a new offering, and one of a still-small subset of first-party AWS services that feature partnerships with other companies (as of this writing, OpsWorks for Chef and Puppet, VMware on AWS / RDS on VMware, Managed Prometheus, and Managed Service for Grafana are the ones I’m aware of; their managed Kafka and Elasticsearch services are apparently not done in conjunction with third parties). I’d use this if I were already running OpenShift in production in my on-prem environment and/or had deep expertise with OpenShift among my staff. Even AWS’s own marketing of this service tells a transitional story, about using this for workloads that are already using OpenShift elsewhere in the environment. If you’re going greenfield, I’d pass on this.

9. AWS IoT Greengrass

Greengrass is an open source runtime and cloud service dingus that lets you run Lambda functions and containers locally on your own devices (usually taken to mean embedded devices), while still finding a way to pay AWS for the privilege. This has been around for a while–long enough to have a “we have screwed up” 2.0 release. It has some older-style approaches to things, such as only charging per device per month rather than a bunch of other billing dimensions. I haven’t yet used this service in anger.

10. CodeBuild

If you need to run a container on a schedule, it’s hard to go wrong with CodeBuild. While it’s imagined as a build/release tool, it’s also the best way both to run containers serverlessly, with available container sizes far larger than those of most other services, and to execute a task that previously existed as a cron job. I know it sounds like I’m misusing a service, and I confess it started that way, but the more I look at this application and talk about it with others, the more convinced I am that I inadvertently stumbled onto a novel use for a tool that goes beyond what AWS Marketing envisioned when they told its story.

11. EKS Anywhere and 12. ECS Anywhere

These two services are similar to the above-mentioned versions without the “Anywhere” added in, except that they can run in your on-premises environment–or in other cloud providers, though for obvious reasons that’s not marketed super well or loudly. I’d consider them for a transitional story or for solidly hybrid deploys–once they come out of preview.

13. Fargate

Fargate is a serverless compute engine that takes the place of “nodes you manage yourself” when used with either ECS or EKS. AWS handles the scaling, placement, and underlying infrastructure issues. Given its support for Spot and the fact that Savings Plans now cover its usage, I’m a big fan of the platform. It may be one of the better innovations to come out of AWS’s container group.

14. App2Container

App2Container is a CLI tool that AWS puts out that modernizes both Java and .NET applications. What does “modernization” mean? You guessed it; it shoves them wholesale into containers, generates the ECS or Kubernetes definitions, and calls it a day. Not working with either one of those languages in anger, I couldn’t tell you whether this service is awesome, terrible, or somewhere in between.

15. Copilot

Copilot is a CLI utility that presumably came out of AWS’s container group looking at the official AWS CLI, realizing that getting it to work the way they wanted it to in order to get straightforward tasks done would be a gargantuan effort, giving up entirely, and building this thing from scratch instead. It takes your application and shoves it into ECS, then calls it a day. Given how annoying those services are to work with directly, I’d give this tool a strong thumbs up.

16. Elastic Beanstalk

This was AWS’s attempt at a “Heroku but with extra steps” managed application service. You’d point it at your code repository in a wide variety of languages, it would churn for a while, and then it’d run the application for you on top of AWS resources. It predates the container explosion, but has been largely neglected since–with the notable exception of “adding support for Docker images.” I like it, but it doesn’t feel like this is where AWS’s container attention lives these days.

17. AWS Lambda

“Wait, isn’t Lambda their serverless function dingus?” Yes, it very much is–but as of re:Invent 2020, it also supports Docker images as a function packaging mechanism, meaning that you can run your Docker images as Lambda functions–with all the caveats you’d expect from that. It still definitely counts as a way to run containers, albeit at the cost of significantly blurring the line between Fargate and Lambda.

And there you have it; the 17 ways to deploy containers atop AWS. Some may suit your needs better than others, but at least we now have a single place to point people towards when they’re faced with this agonizing decision.

To summarize:

Running OpenShift already? Use ROSA. Forced to use Kubernetes? EKS. Have a simple container you just want AWS to manage for you? App Runner. Have a complex application and don’t want to drown in complexity? ECS. I’ll revise these judgements as time goes on.
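For those who prefer their opinions executable, here is a minimal sketch of that summary as a Python function. The function name, its parameters, and the order in which the questions are checked are all my own assumptions for illustration; the summary itself doesn't rank them, and none of this calls any AWS API.

```python
def pick_container_service(
    runs_openshift: bool = False,
    needs_kubernetes: bool = False,
    single_simple_container: bool = False,
) -> str:
    """Return the service the summary above suggests for a workload.

    Hypothetical helper: the flags and the precedence between them
    are assumptions, not anything AWS publishes.
    """
    if runs_openshift:
        # Already running OpenShift elsewhere: stay on it.
        return "ROSA"
    if needs_kubernetes:
        # Kubernetes is a hard requirement.
        return "EKS"
    if single_simple_container:
        # One simple container you want AWS to manage for you.
        return "App Runner"
    # Anything more complex, without a Kubernetes requirement.
    return "ECS"


if __name__ == "__main__":
    print(pick_container_service(needs_kubernetes=True))
    print(pick_container_service(single_simple_container=True))
    print(pick_container_service())
```

Treat it as a starting point for an argument rather than a decision engine; the other thirteen options still exist for the cases that don't fit these four questions.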

If you disagree with any of this, I’m on Twitter for your angry retorts.