I’ve been giving Azure a hard time for its security posture lately — and rightfully so!

Therefore, I suspect that today’s screed is going to come as a bit of a shock to some folks, because it’s nothing less than a love letter to an Azure service that has solved a problem I’ve been noodling on for years.

I periodically talk about my homebuilt Last Tweet in AWS Twitter client. It’s hosted in 20 AWS regions, it’s built entirely out of serverless technologies, it’s my CDK testbed application, and (lest this get overlooked!) it’s often what I use when I live-tweet a conference talk with photos of the speaker’s slides or write a thread that’s heavy on the images. What Last Tweet in AWS didn’t do was include alt text for those images.

For those unaware, alt text is meant to convey the “why” of the image as it relates to the context it appears within. It’s read aloud to users by screen reader software. It also displays on the page if the image fails to load.

Now, understand that Last Tweet in AWS is used to post things to Twitter, and one thing that some corners of Twitter get upset about is when people don’t include alt text for their images.

At first, it was easy to dismiss alt text as unimportant. “Oh, that’s just Twitter being Twitter. How many people with blindness or low vision could really be reading my nonsense?” As a bonus, this thought pattern let me avoid doing extra work. But the more I thought about it, and the more I heard about the importance of alt text from people I deeply respect, the more I began to question whether I was wrong. (Spoiler: I was.)

Being inclusive matters. I cannot put it any more clearly than that; I don’t know how to explain why it’s important that you care about other people, but it very much is. I’m not willing to debate this point. If you need a selfish reason to care, remember that we’re all only temporarily abled. If we’re fortunate enough to live long enough, vision is one of the things that deserts all of us, including you.

Gosh dang it, there’s got to be a better way

With accessibility in mind, I enabled the Last Tweet in AWS checkbox that requires alt text for every image as I attempted to live-tweet a conference talk. It was brutal. I spent more time doing alt text than I did authoring the tweet itself!

Realistically, people are unlikely to double or triple their ongoing workload in the name of accessibility. But as we’ve established, inclusivity matters.

I didn’t know how to solve this problem. And the last thing I expected to solve it with was a Machine Learning® service from, of all companies, Microsoft Azure.

Making Last Tweet in AWS effortlessly inclusive

If you want to experience the accessibility magic that Azure’s Computer Vision has unleashed, use a Twitter account to log into Last Tweet in AWS. (Yes, the permissions it requests are scary; due to limitations in Twitter’s API, there’s no way to scope them down further and still have the client do anything useful. For what it’s worth, you have my word that I don’t log or retain any credentialed material.)

The “Require Alt Text” and “Auto-Generate Alt Text” options are enabled by default; with auto-generation on, each image is sent to Azure’s Computer Vision API as it’s uploaded. The quality of the resulting alt text varies. One experiment with [my trademark selfie face](https://twitter.com/QuinnyPig/photo) spit back “a man with his mouth open.” This is directionally acceptable to me; I can always tweak the autogenerated description to more accurately reflect the context in which I’m using the photo.
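For the curious, here’s a minimal sketch of what the auto-generate step could look like, assuming Computer Vision’s v3.2 REST `describe` operation and a key-authenticated resource. The function names `describe_image` and `caption_from_describe` are hypothetical illustrations, not how Last Tweet in AWS actually does it; the endpoint and key are whatever your own Azure resource hands you.

```python
import json
import urllib.request


def describe_image(image_bytes: bytes, endpoint: str, key: str) -> dict:
    """POST raw image bytes to the v3.2 Describe Image operation; return parsed JSON."""
    request = urllib.request.Request(
        f"{endpoint}/vision/v3.2/describe?maxCandidates=1",
        data=image_bytes,
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/octet-stream",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def caption_from_describe(result: dict) -> str:
    """Pick the highest-confidence caption and capitalize it; "" if none came back."""
    captions = result.get("description", {}).get("captions", [])
    if not captions:
        return ""
    best = max(captions, key=lambda c: c.get("confidence", 0.0))
    text = best.get("text", "").strip()
    return text[:1].upper() + text[1:]
```

Keeping the JSON parsing separate from the HTTP call means you can test the caption logic against a stubbed response without spending a cent.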

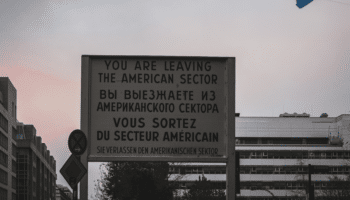

Where the whole thing falls to custard is when the picture is a screenshot of text or a photo of a conference slide when I’m live-tweeting. That’s where the “OCR Mode” toggle comes into play. When it’s set to “Off,” nothing happens. When it’s set to “Always,” it runs every image uploaded through Computer Vision’s optical character recognition functionality, which is similar to Amazon Textract.
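OCR works a bit differently, because Computer Vision’s Read API is asynchronous: you submit the image, get a 202 back with an `Operation-Location` header, and poll that URL until the status reads `succeeded` or `failed`. A sketch, again assuming the v3.2 REST endpoints; `read_text` and `text_from_read_result` are hypothetical names, and the polling interval and retry cap are arbitrary choices.

```python
import json
import time
import urllib.request


def read_text(image_bytes: bytes, endpoint: str, key: str,
              poll_seconds: float = 1.0, max_polls: int = 30) -> str:
    """Submit an image to the v3.2 Read API, poll for the result, return the text."""
    submit = urllib.request.Request(
        f"{endpoint}/vision/v3.2/read/analyze",
        data=image_bytes,
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/octet-stream",
        },
        method="POST",
    )
    with urllib.request.urlopen(submit) as response:
        # 202 Accepted: the result location comes back in a header, not the body.
        operation_url = response.headers["Operation-Location"]
    if not operation_url:
        raise RuntimeError("Read API response had no Operation-Location header")

    for _ in range(max_polls):
        poll = urllib.request.Request(
            operation_url, headers={"Ocp-Apim-Subscription-Key": key}
        )
        with urllib.request.urlopen(poll) as response:
            result = json.load(response)
        status = result.get("status")
        if status == "succeeded":
            return text_from_read_result(result)
        if status == "failed":
            raise RuntimeError("Read operation failed")
        time.sleep(poll_seconds)  # still "notStarted" or "running"
    raise TimeoutError("Read operation did not finish in time")


def text_from_read_result(result: dict) -> str:
    """Flatten every recognized line on every page into newline-separated text."""
    lines = []
    for page in result.get("analyzeResult", {}).get("readResults", []):
        for line in page.get("lines", []):
            lines.append(line.get("text", ""))
    return "\n".join(lines)
```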

The magic breakthrough came from the idea of chaining the two functions together with my OCR “Guess” mode. There are a few phrases in image descriptions that are highly indicative that something should be OCR’d — “a close-up of a machine with text,” “a screenshot of a computer with text,” and so on. When those terms show up, the client then submits the image for OCR via Azure’s Computer Vision Read API. This saves folks with screen-reading software the pleasure of whatever the heck gets output when you upload every image and attempt to OCR a photo of a pineapple.
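Stripped of the Azure calls, Guess mode boils down to string matching on the caption. Here’s a sketch where `TEXT_HINTS` is a hypothetical phrase list (both captions quoted above contain “with text”), and `compose_alt_text`, which joins the caption with any OCR output and trims the result to Twitter’s 1,000-character alt text limit, is likewise an illustration rather than the client’s actual logic.

```python
from enum import Enum


class OcrMode(Enum):
    OFF = "Off"
    ALWAYS = "Always"
    GUESS = "Guess"


# Hypothetical caption phrases that suggest an image is mostly text.
TEXT_HINTS = ("with text", "screenshot")

MAX_ALT_TEXT = 1000  # Twitter's alt text character limit


def should_ocr(caption: str, mode: OcrMode) -> bool:
    """Decide whether to send an image to OCR, given its caption and the mode toggle."""
    if mode is OcrMode.OFF:
        return False
    if mode is OcrMode.ALWAYS:
        return True
    lowered = caption.lower()
    return any(hint in lowered for hint in TEXT_HINTS)


def compose_alt_text(caption: str, ocr_text: str = "") -> str:
    """Join the caption and any OCR text, then truncate to the alt text limit."""
    parts = [p for p in (caption.strip(), ocr_text.strip()) if p]
    return ": ".join(parts)[:MAX_ALT_TEXT]
```

A photo of a pineapple yields a caption with none of the hint phrases, so in Guess mode it never reaches OCR at all.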

Finally, Last Tweet in AWS can generate alt text in a sophisticated, low-effort way. It’s an absolute game-changer for making accessibility something I don’t really have to think about when I’m mid-flow of tweeting. Reducing friction is incredibly important if we want to create a change in the world toward more inclusivity.

What I (actually) love about Computer Vision

Dearest Azure,

Your Computer Vision API is, frankly, a joy to work with. Yes, it’s still an Azure offering, so the documentation is insecure in its suggestions and my requests to fix it get closed in the name of usability.

But the capability is so powerful that I’m mystified that I haven’t seen any other public examples of Twitter clients integrating functionality like this.

In cloud economics terms, Computer Vision isn’t cheap, but it’s also not cost-prohibitive. Azure’s pricing is esoteric at best, but for every 1,000 images I have it scan, it charges me either $1 or $1.50. That could conceivably become a problem if I ever have to scale out Last Tweet in AWS, but at that point it wouldn’t take much to slap Stripe in front of it and charge folks a few bucks a month to use it.
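To put those rates in context, here’s a back-of-the-envelope estimator. It assumes each API call bills as one transaction at one of the two per-1,000 rates above, and that Guess mode makes a second (Read) call only for the share of images that look like text; `ocr_fraction` is a hypothetical knob you’d have to measure yourself, not something Azure reports.

```python
def estimate_cost(images: int, ocr_fraction: float, price_per_1000: float) -> float:
    """One describe call per image, plus one Read call for the share routed to OCR."""
    calls = images + images * ocr_fraction
    return round(calls / 1000 * price_per_1000, 2)
```

With hypothetical inputs of 10,000 images and a quarter of them routed to OCR at the $1.50 rate, that’s 12,500 calls and $18.75, versus $30.00 if “Always” sent every image through both calls.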

At various times, I’ve been incredibly skeptical of Microsoft, Azure, and Machine Learning®. I assure you, nobody is more surprised than I am to have discovered a hidden gem like Computer Vision lurking within the platform. At a time when we all need to be thinking about how we’ll create a more inclusive and accessible internet, this is the kind of tool more folks should have on hand.

Credit where it’s due: Azure, this thing nailed it.

Hugs and puppies,
Corey